How many lights are too many lights in a scene? What if they are dynamic? What if we want them all to cast shadows? This question was answered in a recent paper from the University of Google Search, which revealed that you can't have that many. It takes a lot of very clever rendering tricks (which, for some reason, always involve baking), and even then not all of those lights will cast shadows. Some might light some objects in the scene and miss others, some might look like a light but emit no light at all, and there is light leaking, among other issues, so careful scene tuning is needed to make everything look good.

Can we do better? Can we free ourselves of these shackles and run freely on the prairie of physically correct dynamic lighting? Can we have our cake and light it correctly while we eat it? The answer to these questions is a resounding YES. How, though? Well, we will rely on path tracing, because that's what we do here. Wait a second, isn't that crazy slow? Don't throw that cake at me just yet: we will do it all at interactive frame rates. Bear with me while I explain how to get to the solution. This post builds on the idea of direct lighting, also called next event estimation; Peter Shirley's blog has an excellent explanation [1].

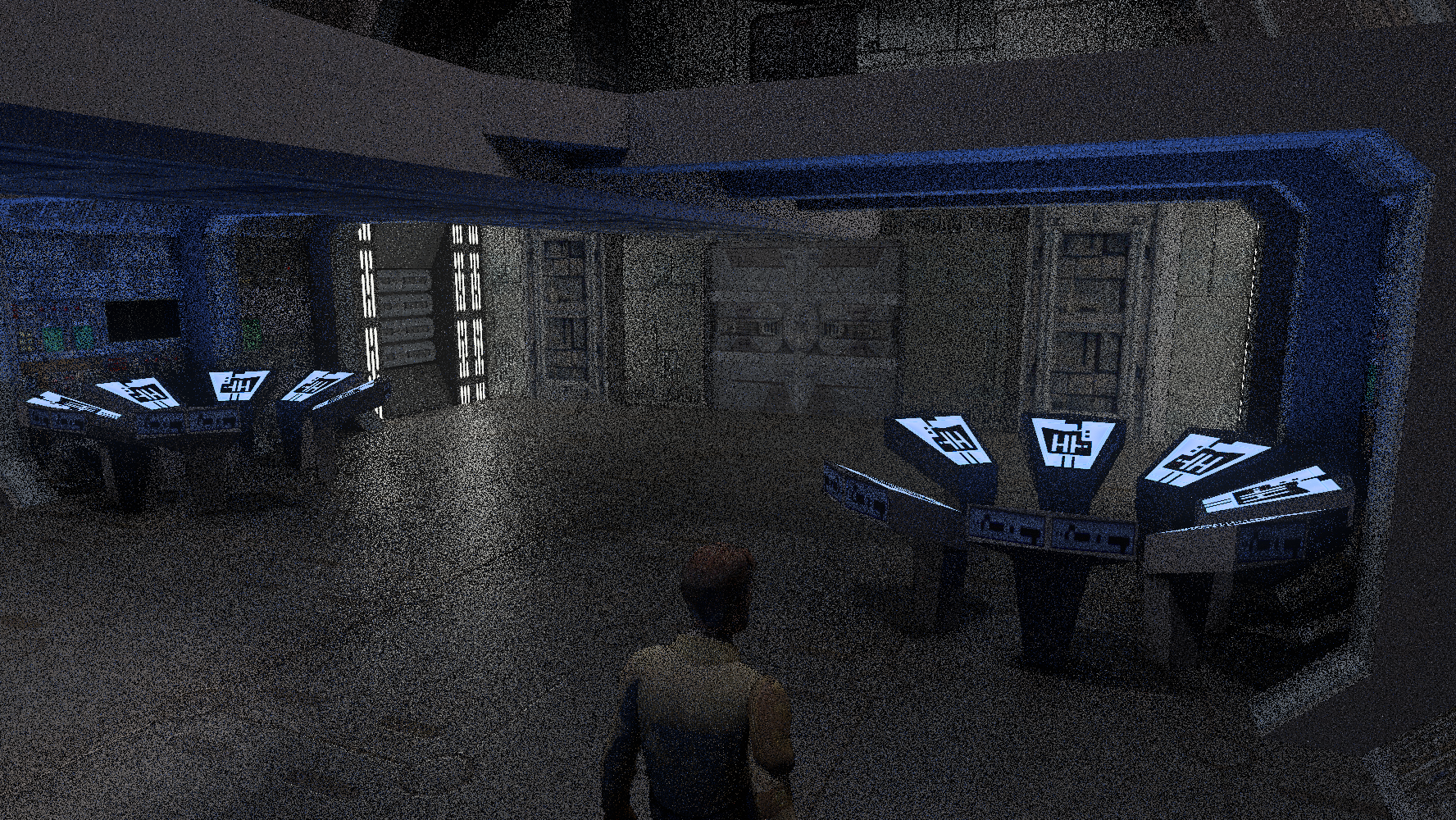

This is the scene we will be working with. This image was rendered using 2,000 samples, and the whole map has 6,025 lights (I have not added them all yet, so there will be more); of course, this render took several seconds. How are we going to render this scene at interactive frame rates, like 60 FPS or higher (20 FPS if you grew up playing N64)? It sounds like a nightmare, but do not despair. One of the very first things we can do is build smaller lists of lights based on which area of the map each light is in and which other areas it could potentially light, for example by knowing which room a light is in and which other rooms that room is connected to. Doing that, we know that this area has 222 potential lights. That still looks like an unwieldy number, but we have some options, so let's explore a few.
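Building those per-area lists could look something like the sketch below. It assumes each light is already tagged with the area it sits in and that we know which areas connect to which; both inputs, and the function name, are hypothetical, and a real engine would derive them from its map data. This version only looks one connection away.

```python
from collections import defaultdict

def build_area_light_lists(light_areas, area_links):
    """For every area, list the lights that could potentially reach it.

    light_areas: dict light_id -> area the light sits in (hypothetical input)
    area_links:  dict area -> areas directly connected to it (hypothetical)
    Only one connection away is considered; lights further away are dropped.
    """
    lights_in_area = defaultdict(list)
    for light_id, area in light_areas.items():
        lights_in_area[area].append(light_id)

    candidates = {}
    for area, neighbors in area_links.items():
        found = set(lights_in_area[area])          # lights in the area itself
        for other in neighbors:
            found.update(lights_in_area[other])    # lights in connected areas
        candidates[area] = sorted(found)
    return candidates
```

At render time a pixel only loops over the list for its own area, which is how 6,025 lights become 222 candidates for the area shown above.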

We could simply use them all at each pixel: calculate each light's contribution and add them all up. Of course, we can skip the many lights that face away from a surface or sit behind it. However, we'd still end up with quite a big number, and calculating the contribution of each light would definitely bring the GPU to its knees; the coil whine would be unbearable. On top of that there is another problem: what if some lights are behind a door, on the other side of a wall, or occluded by an object? If we ignore this issue, we end up with light leaking:

Can you notice the light coming from who knows where? It seems to come from under the walls and under the door, the shadows are gone, and it definitely looks really bad... and familiar, if you've been gaming for a while. How do we tackle this? We use what are called shadow rays to test for visibility.

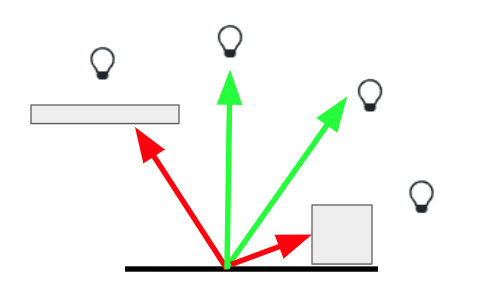

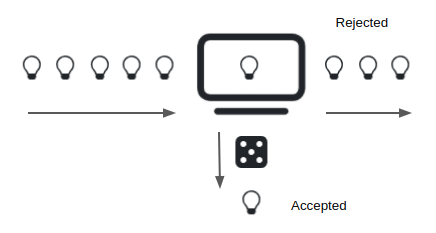

What we do is trace a ray from the pixel we want to light toward a point on the light. If the ray doesn't intersect anything, the light is visible and we can confidently calculate its contribution. If the shadow ray does intersect something, the light is not visible (it is occluded) and we ignore it. Going back to our idea of using all lights, we'd have to trace hundreds of shadow rays per pixel, one for each light. That would yield very good results, but tracing rays is a very expensive operation and we can only afford a few per pixel, so kiss the interactive frame rate goodbye. Unfortunately, the idea is a no-go, but it taught us something important: we definitely need shadow rays, and since they are so expensive, we can only check visibility for one or two lights per pixel if we want to keep a good frame rate.
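To see why the "use every light" idea is so expensive, here's a minimal sketch that culls lights behind the surface or facing away, then spends one shadow ray on each remaining light; for contrast, it also includes the cheap option of spending a single ray on one light picked uniformly at random. Everything here is an assumption for illustration: lights are oriented points `(position, facing normal, intensity)`, occluders are solid spheres, the contribution is a simple cosine-over-distance-squared term, and all function names are hypothetical.

```python
import math
import random

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def unshadowed_contribution(p, n, light):
    """Contribution of one light at surface point p (unit normal n), ignoring
    occlusion. light = (position, unit facing normal, intensity) is a
    hypothetical oriented point light, not a real engine's light format."""
    pos, ln, intensity = light
    d = sub(pos, p)
    dist2 = dot(d, d)
    if dist2 == 0.0:
        return 0.0
    dist = math.sqrt(dist2)
    w = (d[0] / dist, d[1] / dist, d[2] / dist)  # direction toward the light
    cos_surf = dot(n, w)     # <= 0: the light is behind the surface
    cos_light = -dot(ln, w)  # <= 0: the light faces away from the point
    if cos_surf <= 0.0 or cos_light <= 0.0:
        return 0.0
    return intensity * cos_surf * cos_light / dist2

def shadow_ray_blocked(p, q, occluders, eps=1e-4):
    """Shadow ray from p to light point q, tested against solid spheres given
    as (center, radius); a real renderer would query its BVH instead."""
    d = sub(q, p)
    a = dot(d, d)
    if a == 0.0:
        return False
    for center, radius in occluders:
        oc = sub(p, center)
        b = 2.0 * dot(oc, d)
        c = dot(oc, oc) - radius * radius
        disc = b * b - 4.0 * a * c
        if disc < 0.0:
            continue  # the ray's line misses this sphere entirely
        s = math.sqrt(disc)
        t0, t1 = (-b - s) / (2.0 * a), (-b + s) / (2.0 * a)
        if t0 < 1.0 - eps and t1 > eps:  # sphere overlaps the segment p -> q
            return True
    return False

def shade_all_lights(p, n, lights, occluders):
    """'Use every light': cull lights that can't contribute, then spend one
    shadow ray on each remaining light. Returns (radiance, rays_traced)."""
    total, rays = 0.0, 0
    for light in lights:
        c = unshadowed_contribution(p, n, light)
        if c == 0.0:
            continue  # culled for free, no ray needed
        rays += 1
        if not shadow_ray_blocked(p, light[0], occluders):
            total += c
    return total, rays

def shade_one_random_light(p, n, lights, occluders, rng=None):
    """The cheap alternative: one light picked uniformly at random and one
    shadow ray. Multiplying by N (dividing by the pick probability 1/N) makes
    its average over many frames match shade_all_lights, with lots of noise."""
    if rng is None:
        rng = random.Random()
    if not lights:
        return 0.0
    light = rng.choice(lights)
    c = unshadowed_contribution(p, n, light)
    if c == 0.0 or shadow_ray_blocked(p, light[0], occluders):
        return 0.0
    return c * len(lights)
```

With 222 candidate lights, `shade_all_lights` could trace up to 222 rays for a single pixel, while `shade_one_random_light` never traces more than one.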

Given that, we could select one light at random, spend our very valuable shadow ray checking its visibility and, if everything is OK, calculate that light's contribution. How does that look? Here it is:

OK, it looks pretty noisy, dark, and far from good, but hey, the frame rate is good. Hmm, can we use that shadow ray in a smarter way? Yes; look at the scene again.

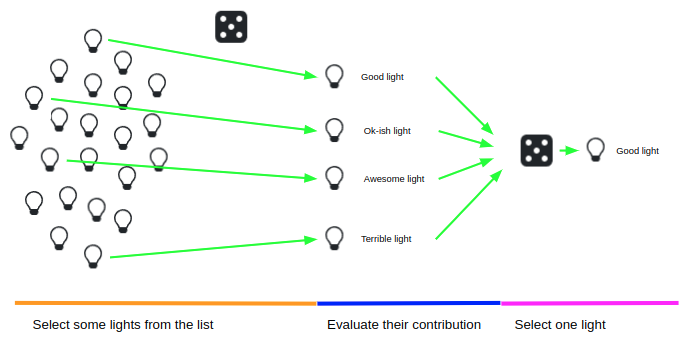

I've highlighted two areas (red and blue squares). It's clear that each one is illuminated by different lights, and that for each pixel some lights are more important and influential than others; if we select those, we will get better results. The technique for doing that is called Resampled Importance Sampling (RIS) [2].

Don't let the name scare you. We can make it work for us as follows: 1) randomly select some lights from our list, since we can't process them all (we call these samples); 2) run each of them through a function that closely resembles the light's contribution to our pixel, which, as you might imagine, helps us identify the lights that contribute more; and 3) using that information, select one sample at random and trace our shadow ray, with the selection favoring the lights that contribute more to the pixel. Here's the result:

Much better, right? This is actually the technique that Q2RTX uses [3], and to be honest, that game's visuals are astonishing. We could stop here; I mean, we have what we wanted: physically based lighting with hundreds of lights running at an interactive frame rate. However, it is still somewhat noisy, and we want to handle even more lights, since Jedi Outcast has waaaay more lights than Quake 2.
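The three RIS steps described above can be sketched like this. It's a minimal sketch under stated assumptions, not Q2RTX's code: candidates come from some cheap source distribution (uniform in the usage below), `target` stands in for "the function that closely resembles the light contribution" (for example the unshadowed contribution), and the function name is hypothetical. The returned weight `W` is what keeps the result unbiased even though we favor bright lights.

```python
import random

def ris_select(candidates, source_pdf, target, rng):
    """Resampled importance sampling over a handful of candidate lights.

    candidates: light indices drawn from a cheap source distribution (step 1)
    source_pdf: callable(light) -> probability of drawing that light
    target:     callable(light) -> cheap approximation of the light's
                contribution at this pixel, no shadow ray involved (step 2)
    Returns (chosen light, W); the pixel's estimate is then
    contribution(y) * visible(y) * W, which costs exactly one shadow ray.
    """
    weights = [target(x) / source_pdf(x) for x in candidates]
    w_sum = sum(weights)
    if w_sum <= 0.0:
        return None, 0.0
    # Step 3: pick one candidate with probability proportional to its weight.
    y = rng.choices(candidates, weights=weights, k=1)[0]
    # Contribution weight: (1 / target(y)) * (1 / M) * sum of weights.
    W = w_sum / (len(candidates) * target(y))
    return y, W
```

For example, with four lights where one is much brighter than the rest and four uniformly drawn candidates per pixel, the bright light gets picked most of the time, while the average of `contribution(y) * W` still matches the sum of all contributions.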

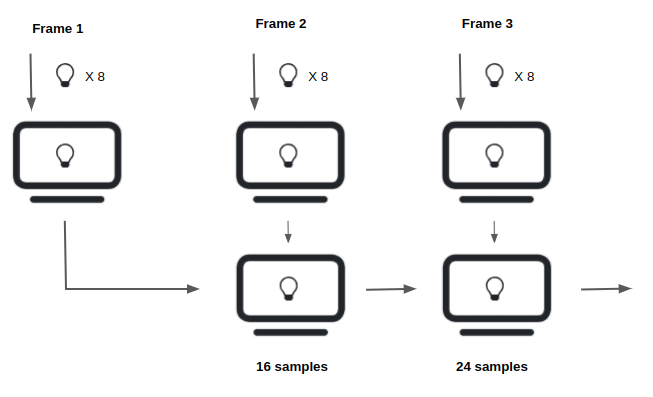

How can we do that? Think about what happens with RIS: in one frame it might find an awesome light, the next frame it might select a not-so-good one, and the frame after that a terrible one, and all of that brings in noise. Could we somehow reuse the samples from previous frames to improve the overall quality? Yes, we can, using Reservoir-based Spatio-Temporal Importance Resampling (ReSTIR) [4].

Ohh noes, another acronym, and this one looks scarier! Don't sweat it: this works by sampling elements from a stream. Wait... what?!?! Think of a streaming service like Netflix: whenever you watch a movie, your TV downloads some frames ahead of what you are watching, and once they are shown they are discarded (yes, I know I'm oversimplifying, but bear with me). Your TV never stores the whole movie. In the same manner, we can think of a reservoir as the TV and the RIS light samples as frames in the stream. As each sample streams through the reservoir, the reservoir randomly keeps it or discards it, with higher-weight samples more likely to be kept. It only holds one sample, along with how many samples it has seen and the probabilities and weights needed to properly use that sample. So we can stream our RIS samples through the reservoir and get the same result as using RIS. At this point you might feel cheated, as if we are accomplishing the same thing with extra steps, but no: reservoirs hold a superpower, they can be combined.
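Here's a minimal sketch of a reservoir, streaming RIS candidates through it, and combining reservoirs. Assumptions: candidates are drawn uniformly, every reservoir uses the same `target` function (think of the same static pixel over time), and the names are mine. Reusing samples across frames or neighbors in a real renderer means evaluating the target at the current pixel and handling the bias issues covered in the paper [4].

```python
import random
from dataclasses import dataclass
from typing import Optional

@dataclass
class Reservoir:
    y: Optional[int] = None  # the one sample currently kept
    w_sum: float = 0.0       # running sum of the weights of all samples seen
    M: int = 0               # how many samples have streamed through
    W: float = 0.0           # contribution weight, set by finalize()

    def update(self, x, w, rng):
        """Stream one sample through: keep it with probability w / w_sum."""
        self.w_sum += w
        self.M += 1
        if w > 0.0 and rng.random() < w / self.w_sum:
            self.y = x

    def finalize(self, target):
        """Same weight plain RIS would give: W = w_sum / (M * target(y))."""
        ok = self.y is not None and target(self.y) > 0.0
        self.W = self.w_sum / (self.M * target(self.y)) if ok else 0.0

def stream_ris(num_lights, target, m, rng):
    """RIS with m uniformly drawn candidates, storing only one of them."""
    r = Reservoir()
    for _ in range(m):
        x = rng.randrange(num_lights)             # source pdf = 1 / num_lights
        r.update(x, target(x) * num_lights, rng)  # RIS weight = target / pdf
    r.finalize(target)
    return r

def combine(reservoirs, target, rng):
    """The superpower: merge reservoirs as if all their samples had streamed
    through one. Each input is re-streamed as its kept sample, weighted by
    target(y) * W * M so it stands in for everything it has seen."""
    out = Reservoir()
    for r in reservoirs:
        if r.y is not None:
            out.update(r.y, target(r.y) * r.W * r.M, rng)
    out.M = sum(r.M for r in reservoirs)  # total samples represented
    out.finalize(target)
    return out
```

Each reservoir costs the same small, fixed amount of memory no matter how many samples went through it, and a combined reservoir reports `M` equal to the sum of its inputs, which is exactly the "twice the samples" effect.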

When we combine them, the resulting reservoir can be treated as if all the samples of the previous reservoirs had been streamed through it. How is this good for us? Let's go back to our pixel in the scene. We create a reservoir, stream RIS light samples through it, and save it. On the next frame, we create a new reservoir and stream more samples, but this time we also combine it with the previous frame's reservoir, and the result is a reservoir with twice the samples! The next frame brings even more samples, and so on and so forth; if we keep doing this, we end up with a reservoir holding a very high-quality sample. Here's the result of doing this:

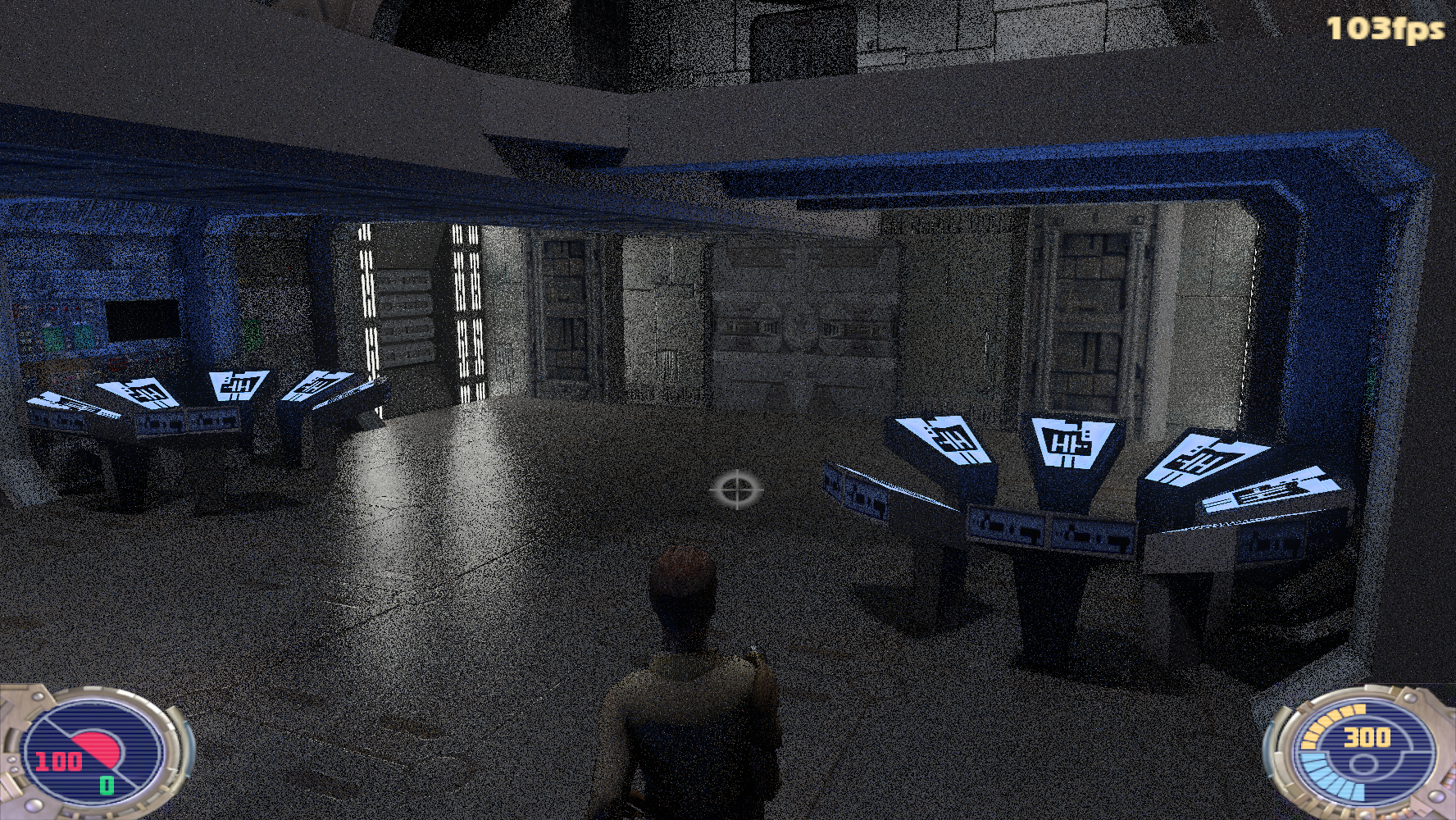

Much, much better, but we can do even better. We could combine not only the previous frame's reservoir but also those of the neighboring pixels, like copying somebody else's homework, just like in the old days. Those neighbors might have found a better light, and we want that info. This can really get crazy, since the neighbors' reservoirs also carry information from previous frames, so in just a few frames each pixel ends up with thousands of samples. Good stuff. Note: care must be taken when asking a neighboring pixel for its reservoir, as that pixel's surface might face a different direction or be at a different depth. If you disregard these differences, you might end up sampling lights that have nothing to do with the current pixel, and all sorts of evil things will be unleashed upon your frames: bias will take over, pixels will go on strike, the ghost of NaN past will visit you. Just be careful. Anyway, here's the result of asking neighboring pixels for their homework:

This looks pretty good. Noise has been greatly reduced, and we got everything we wanted: a bunch of lights correctly lighting a scene, path traced at interactive frame rates, shadows included. Interestingly, this can be achieved using just one shadow ray per pixel.
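The "be careful when asking neighbors for their homework" part can be sketched as a similarity test plus random neighbor selection. This is a minimal sketch: the thresholds (25 degrees between normals, 10% relative depth difference), the function names, and the `[y][x]` G-buffer layout are all assumptions for illustration. The reservoirs of the accepted neighbors would then be merged with the pixel's own, just like the previous frame's reservoir.

```python
import math
import random

def is_similar(n_a, depth_a, n_b, depth_b, max_angle_deg=25.0, max_rel_depth=0.1):
    """Would pixel b's lights plausibly make sense for pixel a?
    Normals are assumed unit length; depth is distance from the camera."""
    cos_angle = sum(u * v for u, v in zip(n_a, n_b))
    if cos_angle < math.cos(math.radians(max_angle_deg)):
        return False  # the surfaces face too different directions
    return abs(depth_a - depth_b) <= max_rel_depth * depth_a  # similar depth

def pick_spatial_neighbors(px, py, normals, depths, k, radius, rng, tries=None):
    """Pick up to k random pixels within `radius` of (px, py) whose surfaces
    pass is_similar. normals/depths are 2D lists indexed [y][x], a
    hypothetical G-buffer layout. Duplicates are allowed in this sketch."""
    height, width = len(depths), len(depths[0])
    picked = []
    for _ in range(tries if tries is not None else 4 * k):
        if len(picked) == k:
            break
        qx = px + rng.randint(-radius, radius)
        qy = py + rng.randint(-radius, radius)
        if (qx, qy) == (px, py) or not (0 <= qx < width and 0 <= qy < height):
            continue  # skip ourselves and anything off-screen
        if is_similar(normals[py][px], depths[py][px], normals[qy][qx], depths[qy][qx]):
            picked.append((qx, qy))
    return picked
```

Rejecting dissimilar neighbors costs no rays at all, only a couple of G-buffer reads, which is why it is worth doing for every candidate neighbor.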

Are you ready to use ReSTIR? Check the paper linked below [4]; a bunch of math and very important details that I left out are in there. Don't feel like reading all that and just want the goods? RTXDI uses this same principle, so go check that out [5].

We've talked about direct lighting and how to greatly improve it. However, we need indirect lighting as well. Can we use a similar technique? Stay tuned for a future post.

Footnotes

[1] What is direct lighting (next event estimation) in a ray tracer?

[2] Importance Resampling for Global Illumination

[3] Ray Tracing Gems II, Chapter 47

[4] Spatiotemporal reservoir resampling for real-time ray tracing with dynamic direct lighting

[5] RTXDI (NVIDIA RTX Direct Illumination)