When it comes to multi-bounce global illumination (GI), how important is it to choose the best direction to shoot rays? In real-time rendering we have a tight ray budget, so we must make the most of those rays. In this post I describe an idea to improve the efficiency of said rays, specifically when sampling the specular lobe of rough materials. I'm basing my ideas on an algorithm called ReSTIR GI [1]; I'll summarize some concepts from that paper in the next paragraphs and build on top of them. BTW, I'll be using some other concepts like RIS and ReSTIR. If you are not familiar with them, you can check [3], an article where I introduce said concepts.

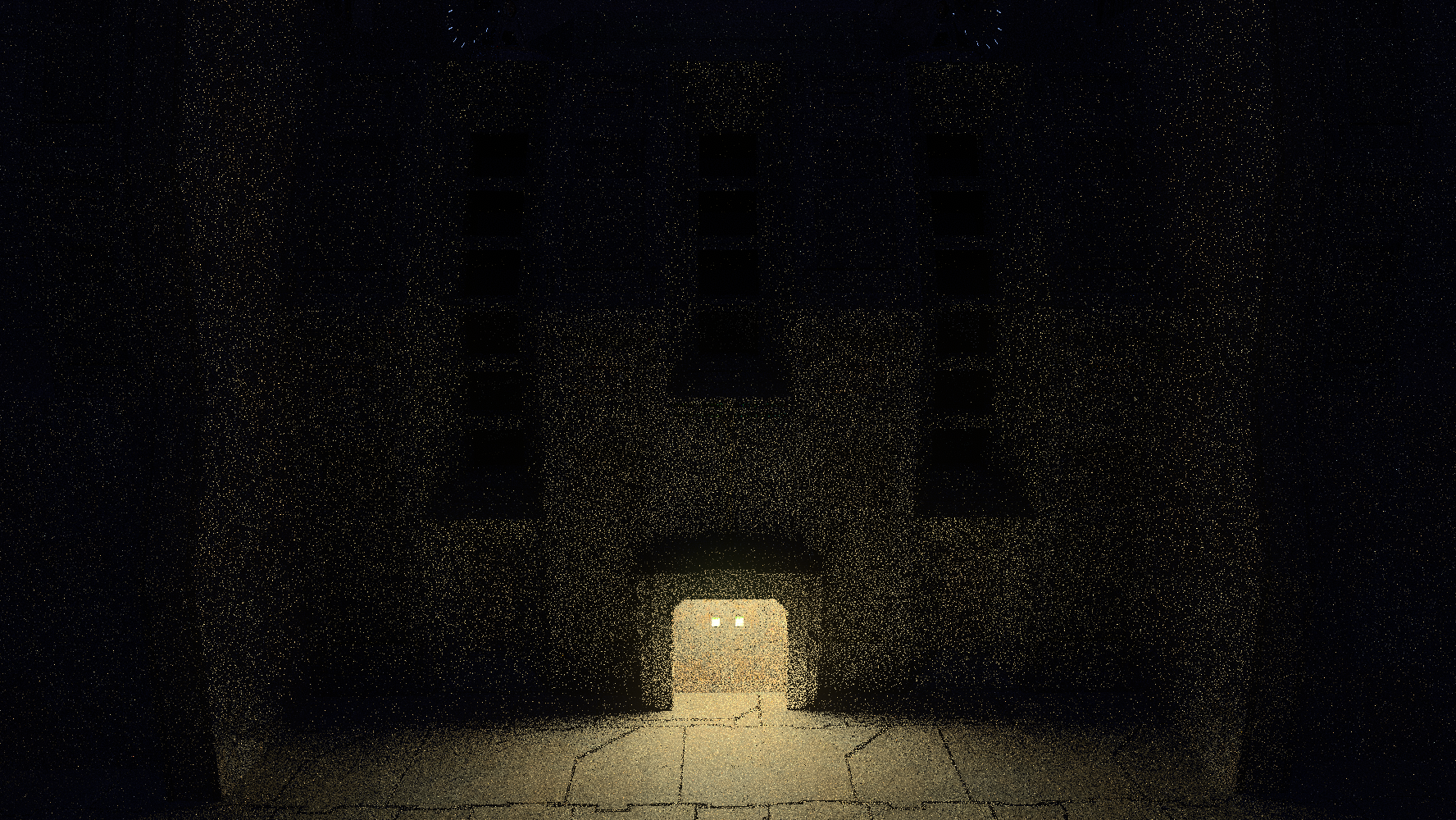

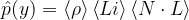

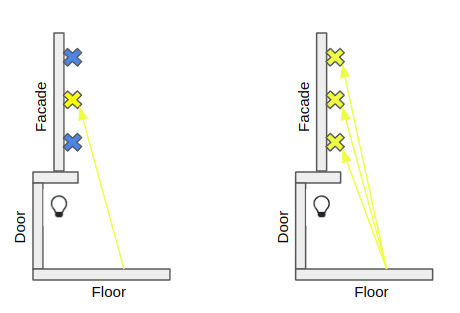

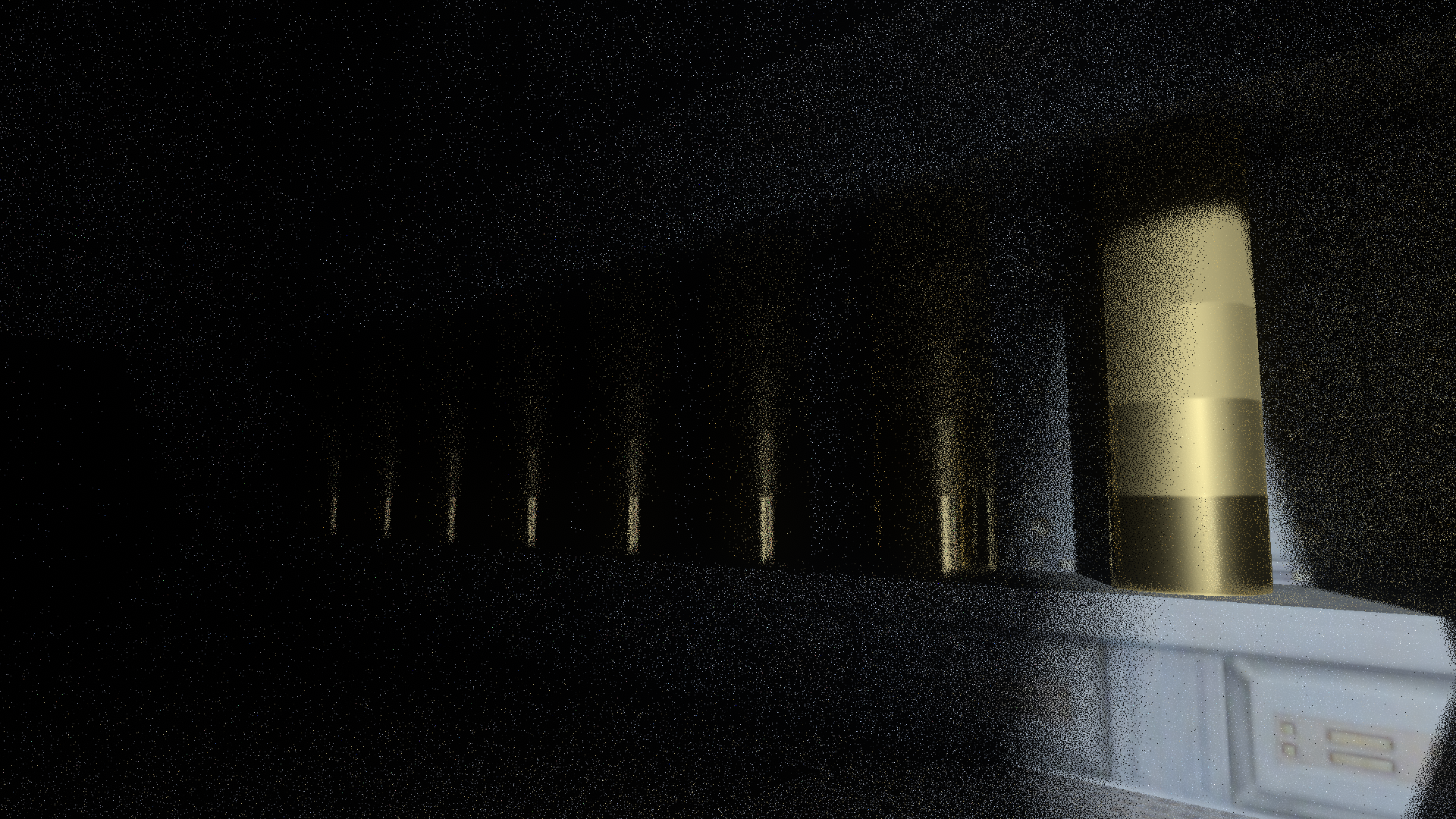

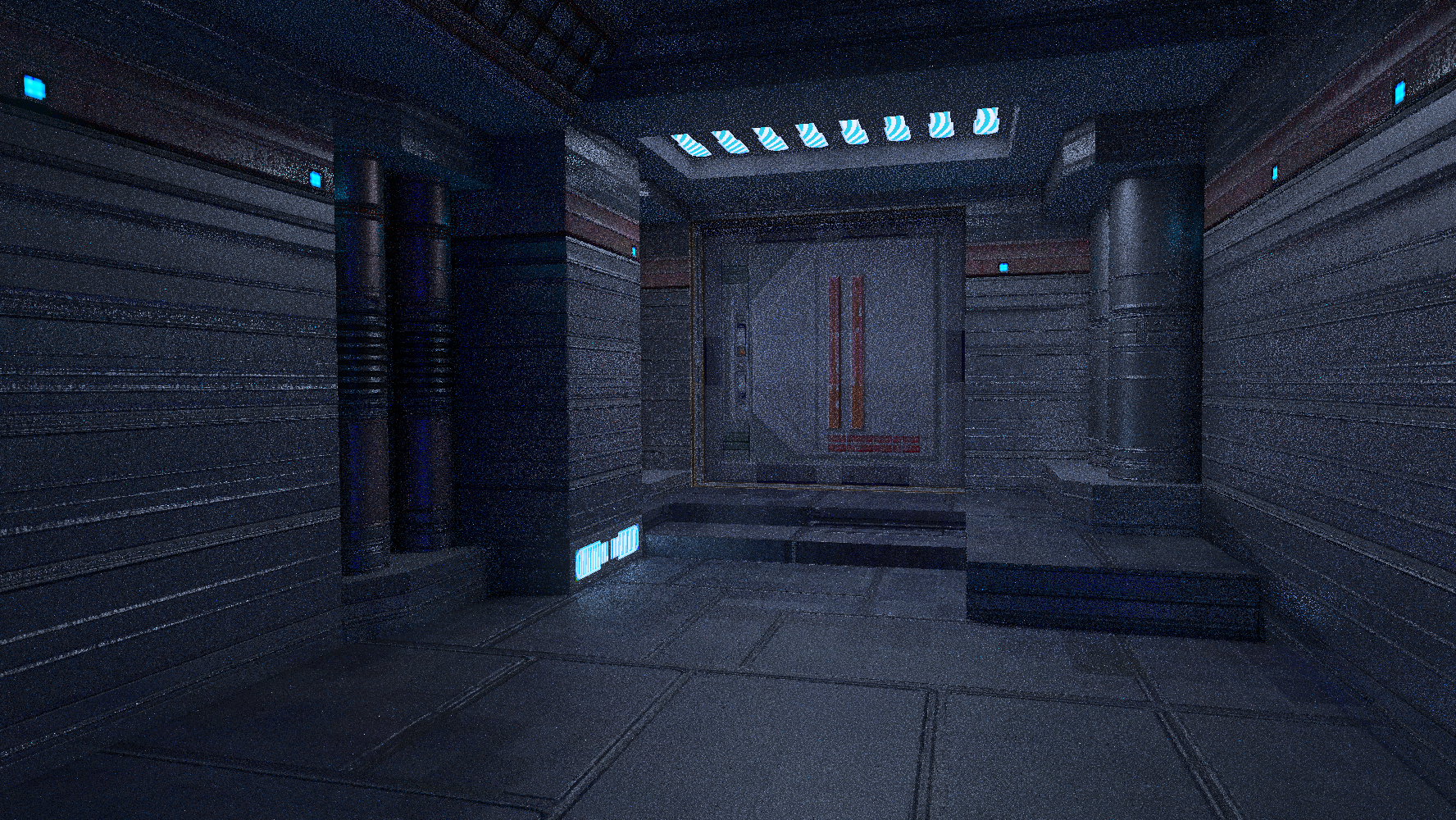

This is the scene I'll be using first to explain ReSTIR GI. There are two bright lamps on top of that door, and the illumination on the building facade comes from light reflected off the floor.

In path tracing, when it comes to deciding where to direct a bounce ray, what we usually do is select either the specular or the diffuse lobe, importance sample that BRDF and shoot a ray. Right now we will focus only on the diffuse lobe. If we hit a surface, we calculate the effect said bounce would have on the light, and then we shoot another ray from that surface following the same procedure, and so on until we hit a light or we decide we've had enough, terminate that path and start all over again. How effective is that?

Doesn't look very promising. To improve things, we can use Next Event Estimation, that is, at each bounce we look for a light, calculate its contribution and propagate that back through our path. How does that look?

Better, but every time we sample the diffuse lobe the ray can still go in pretty much any direction: the sky, the mountain in front of the building, some other part of the building. We don't get a whole lot of light from those bounces, if any at all. Even if the ray goes in the right direction, that is, the floor, and we get a good amount of light, the next frame will end up following a different path and maybe not getting much light back. Could we reuse a path in a future frame if we found that it carries a good amount of light? Even better, could we share that info with some neighboring pixels so they could also follow that path? Of course we can, if we use ReSTIR [2][3].

Unlike the original ReSTIR, we won't be sampling and storing lights; we will store paths. And I can't emphasize this enough: for now we will be dealing with Lambertian diffuse scattering. I'll explain why later on.

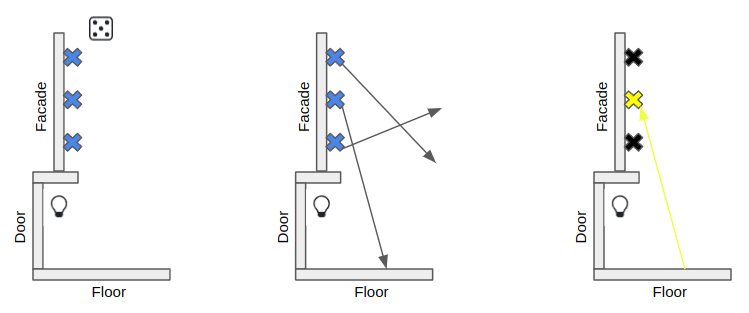

So we generate a new sample by 1) sampling the Lambertian diffuse BRDF to get a direction, 2) shooting a ray in said direction and 3) getting the outgoing radiance (Lo) at the surface we just hit.
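The three steps above can be sketched roughly as follows. This is a minimal Python sketch, not the actual shader code behind this post: `PathSample`, `sample_cosine_hemisphere`, `generate_sample` and the `trace_radiance` callback are hypothetical names, directions live in a local frame whose +Z axis is the surface normal, and radiance is reduced to a single scalar for brevity.

```python
import math
from dataclasses import dataclass
from typing import Callable, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class PathSample:
    direction: Vec3  # sampled direction, local frame with the normal along +Z
    pdf: float       # p(y): PDF of the strategy that produced the direction
    lo: float        # outgoing radiance at the hit point (scalar, assumption)


def sample_cosine_hemisphere(u1: float, u2: float) -> Tuple[Vec3, float]:
    """Cosine-weighted direction from two uniform numbers in [0, 1)."""
    r = math.sqrt(u1)
    phi = 2.0 * math.pi * u2
    z = math.sqrt(max(0.0, 1.0 - u1))  # cos(theta)
    direction = (r * math.cos(phi), r * math.sin(phi), z)
    return direction, z / math.pi       # pdf = cos(theta) / pi


def generate_sample(u1: float, u2: float,
                    trace_radiance: Callable[[Vec3], float]) -> PathSample:
    # 1) sample the Lambertian lobe to get a direction
    direction, pdf = sample_cosine_hemisphere(u1, u2)
    # 2) + 3) shoot the ray and fetch Lo at whatever it hits
    lo = trace_radiance(direction)
    return PathSample(direction, pdf, lo)
```

In a real renderer `trace_radiance` would be the ray tracing call plus the shading of the hit point; here it is just a callback so the sketch stays runnable.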

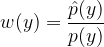

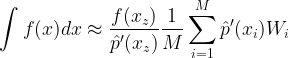

That's it. We just need to come up with a suitable RIS weight that can represent our path and that we can stream through our reservoir. A RIS weight looks like this:

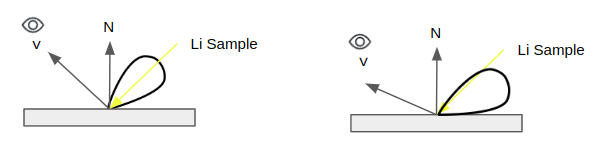

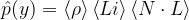

What do we plug where? p(y) can be the PDF of sampling our BRDF, whether that is uniform sampling or cosine-weighted sampling. p̂(y) can be:

p̂(y) = ρ · Li · (N.L)

Where ρ is the BRDF, Li is the incoming radiance from our sample (we call it Lo when it leaves the surface we hit), N is the normal and L is the sample direction vector.

Now that we have our sample, we can stream it into our reservoir and enjoy all the ReSTIR goodness: temporal and spatial reuse.
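For reference, here is a minimal sketch of what streaming a sample through a reservoir can look like. It follows the usual weighted reservoir sampling recipe from the ReSTIR papers; the `Reservoir` class and the helper names are hypothetical, and the target function is treated as a scalar (for example the luminance of ρ · Li · N.L), which is an assumption on my part.

```python
import random
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class Reservoir:
    sample: Optional[Any] = None  # the path we currently keep
    w_sum: float = 0.0            # running sum of RIS weights
    M: int = 0                    # number of candidates seen so far
    W: float = 0.0                # contribution weight of the kept sample

    def update(self, candidate: Any, w: float, rng: random.Random) -> bool:
        """Stream one candidate; keep it with probability w / w_sum."""
        u = rng.random()
        self.w_sum += w
        self.M += 1
        if w > 0.0 and u * self.w_sum < w:
            self.sample = candidate
            return True
        return False

    def finalize(self, target_of_selected: float) -> None:
        """W = w_sum / (M * p_hat(selected)), or 0 if nothing usable."""
        if self.M > 0 and target_of_selected > 0.0:
            self.W = self.w_sum / (self.M * target_of_selected)
        else:
            self.W = 0.0


def diffuse_target(brdf: float, li: float, n_dot_l: float) -> float:
    """p_hat(y) = rho * Li * (N.L), clamped below the horizon."""
    return brdf * li * max(0.0, n_dot_l)


def ris_weight(target: float, source_pdf: float) -> float:
    """w(y) = p_hat(y) / p(y)."""
    return target / source_pdf if source_pdf > 0.0 else 0.0
```

With a single candidate, `W` collapses to 1 / p(y), which is exactly the weight plain BRDF sampling would use; the benefit only shows up once temporal and spatial candidates are streamed into the same reservoir.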

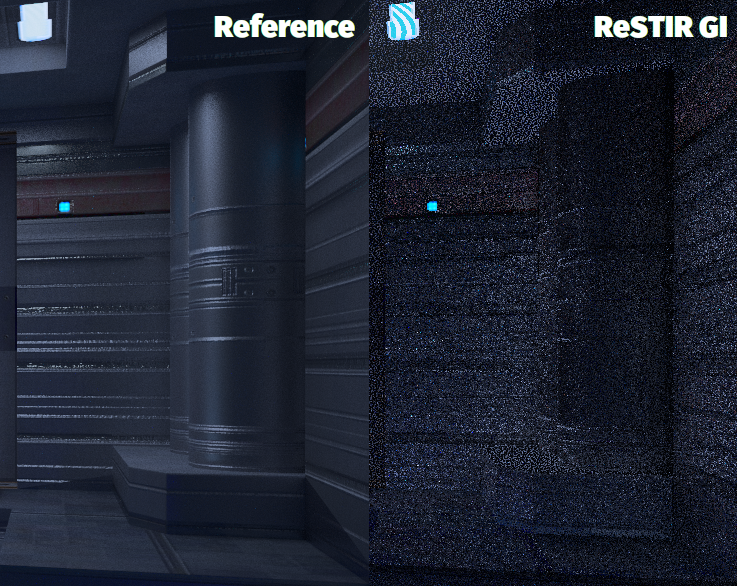

What are the results?

Much better, it's like the pixels know that they should be shooting rays at the floor. Care must be taken when performing spatial reuse: you will need to calculate a Jacobian determinant to account for the geometric differences between pixels. The equation and a great explanation are in section 4.3 of the ReSTIR GI paper [1].
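As a rough idea of what that Jacobian involves, here is a sketch based on the usual solid-angle change of variables (dω = cos θ / d² dA): the same sample point is seen from two different visible points, so the ratio of the two solid-angle measures is a ratio of cosines times a ratio of squared distances. The function and parameter names are hypothetical, and you should check the exact form and how it is applied against section 4.3 of the paper before relying on it.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def reuse_jacobian(x1_q: Vec3, x1_r: Vec3, x2: Vec3, n2: Vec3) -> float:
    """Jacobian for reusing a sample point x2 (unit normal n2), found from
    the visible point x1_q of a neighbor, at our own visible point x1_r.

    |J| = (|cos phi_r| / |cos phi_q|) * (|x1_q - x2|^2 / |x1_r - x2|^2)
    Returns 0.0 for degenerate configurations so the caller can reject them.
    """
    to_q = _sub(x1_q, x2)
    to_r = _sub(x1_r, x2)
    d2_q = _dot(to_q, to_q)
    d2_r = _dot(to_r, to_r)
    if d2_q <= 0.0 or d2_r <= 0.0:
        return 0.0
    cos_q = abs(_dot(n2, to_q)) / math.sqrt(d2_q)
    cos_r = abs(_dot(n2, to_r)) / math.sqrt(d2_r)
    if cos_q < 1e-6:
        return 0.0
    return (cos_r / cos_q) * (d2_q / d2_r)
```

When both pixels see the sample point from the same position the factor is exactly 1, which is a handy sanity check.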

Why did I begin with Lambertian? Think about it: the View vector has no influence on our samples. We can move the camera around and everything (reservoirs, weights, paths) stays the same, so reusing paths is easy. No stress.

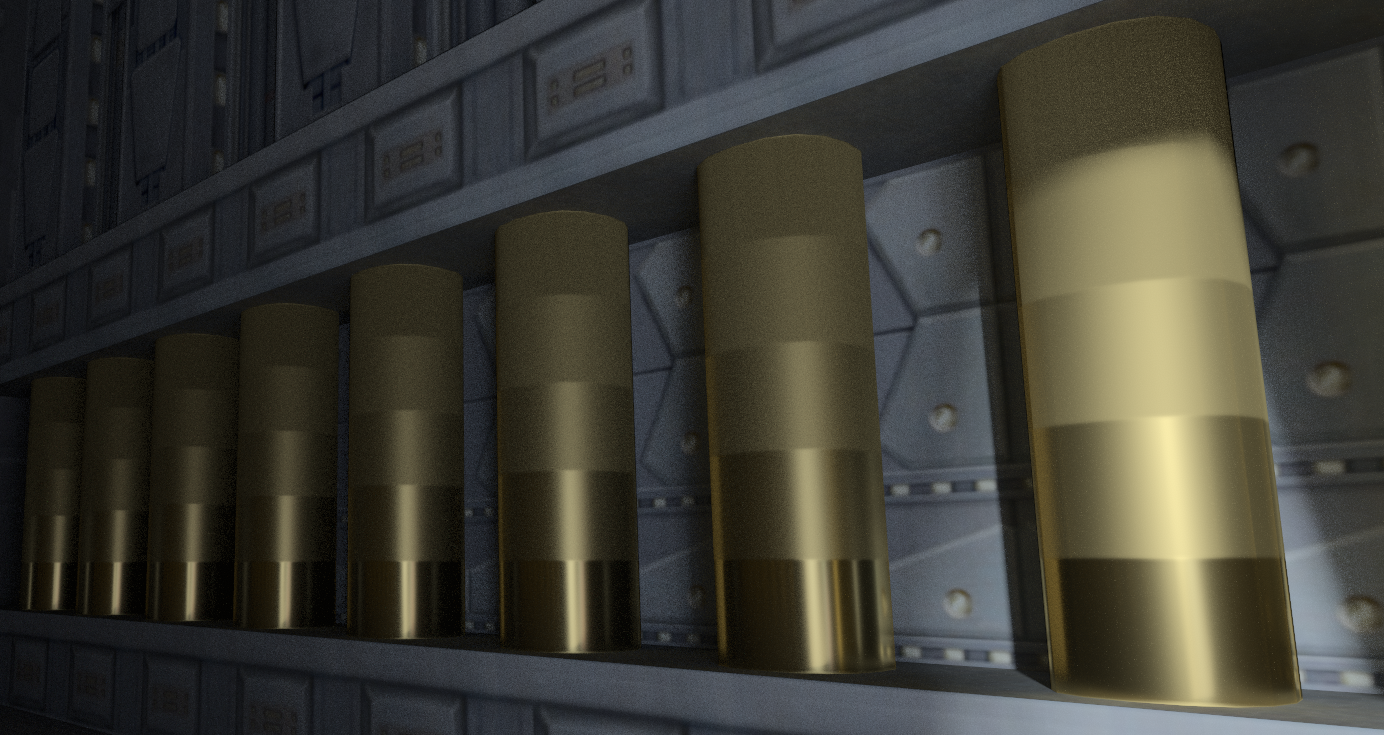

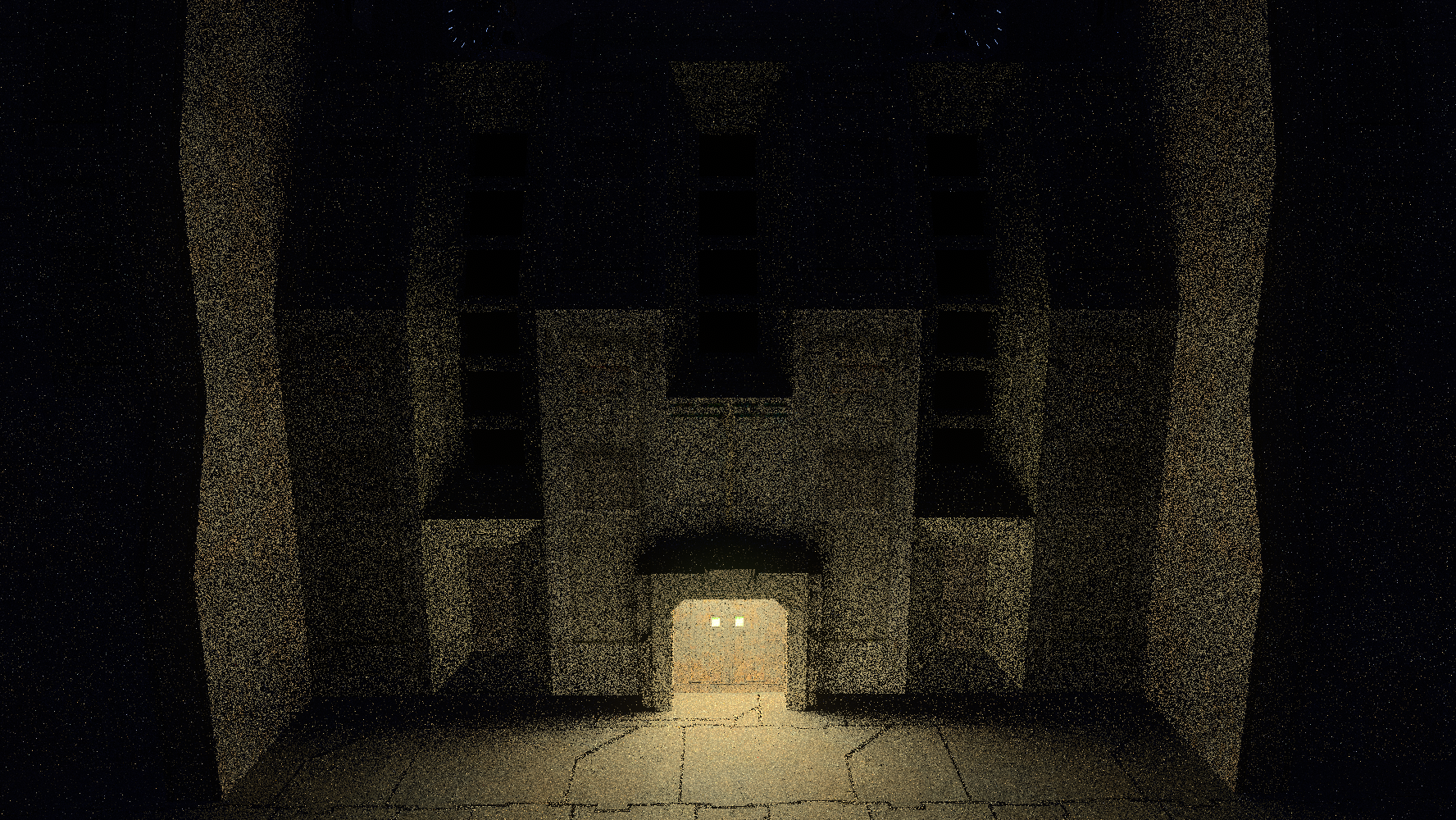

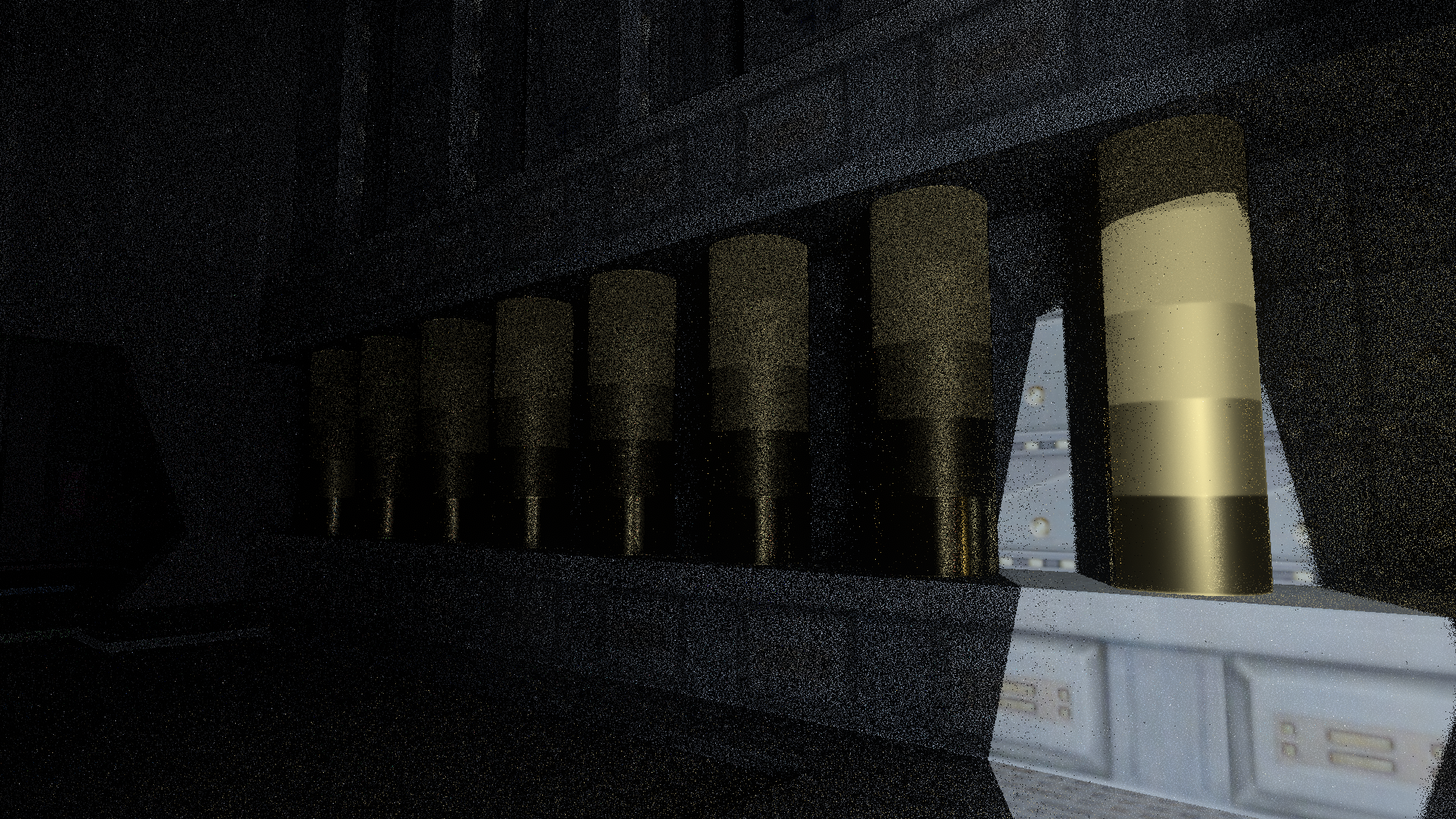

Specular could benefit from ReSTIR GI too, even more so when dealing with low specularity, aka rough materials, which have wider specular lobes and can send rays in more directions. The next scene is what we will be working with:

The pillars are 100% metallic gold, with rings of different roughness (20%, 40%, 60%, 80%, 100%). The light source is behind the camera and is partially occluded by a wall, so those pillars are lit indirectly.

Here's what happens when we combine BRDF sampling and NEE as we did earlier.

Not great results. Can't we just use ReSTIR GI as we did before? Specular is more problematic because it is influenced by the View vector. You have a lot of light coming from one direction, then you change the view vector and it can change drastically, even go to zero. How bad can that be? Take a look again at our RIS weight:

w(y) = p̂(y) / p(y)

We could plug in the same equations, namely p(y) can be the PDF of sampling our specular BRDF and p̂(y) can be (ρ)(Li)(N.L) as we did before. However, both are dependent on the View vector: change it and both yield completely different results. So how do we tackle that? Do we reweight our temporal reservoirs once the camera has moved a little bit? Do we discard them and start all over? If we constantly discard reservoirs, why bother with ReSTIR then? How do we resample spatially, since the view vector is going to be slightly different for each reservoir? Do we need another Jacobian?

My head hurts. Stupid view vector. I guess we could tackle these issues head on and concoct some clever math or ... dance around it all. Guess which one I've chosen, and put on your dancing shoes because that's how we will do it.

One important characteristic of RIS is that it can be used iteratively [4]: we can begin with a sub-optimal p(y) that we use to sample an ok-ish p̂(y), in turn use that to sample a better p̂'(y), and so on. We can also use a cheaper function as p̂(y), something like Phong instead of GGX. We can use these ideas to defang the view vector.

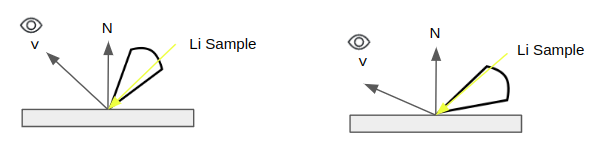

Let's begin by choosing a sub-optimal p(y). We need a sampling strategy whose PDF value remains the same despite the View vector changing, and that super-power belongs to the humble uniform sampling. Wait, what? Yes, if we uniformly sample the specular lobe, the view vector can move around and the p(y) of our sample will not change, provided said sample is still within the specular lobe. This is great for spatial resampling too, since the view vector will be different at neighboring pixels. Where can we get such an odd tool? I came up with a strategy to uniformly sample a specular lobe [5]. Now that post makes sense.
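To make the "PDF does not change" property concrete, here is a stand-in sketch. It is not the strategy from [5]: it simply approximates the lobe with a cone around the lobe axis (for example the reflection vector) and samples that cone uniformly over solid angle. `sample_uniform_cone`, `in_lobe` and `cos_half_angle` are hypothetical names, and how the cone angle is derived from roughness is left out on purpose.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def sample_uniform_cone(u1: float, u2: float,
                        cos_half_angle: float) -> Tuple[Vec3, float]:
    """Uniform direction inside a cone around +Z (local lobe frame).

    The PDF is 1 / solid_angle = 1 / (2*pi*(1 - cos_half_angle)), the same
    constant for every direction inside the cone.
    """
    cos_theta = 1.0 - u1 * (1.0 - cos_half_angle)
    sin_theta = math.sqrt(max(0.0, 1.0 - cos_theta * cos_theta))
    phi = 2.0 * math.pi * u2
    direction = (sin_theta * math.cos(phi), sin_theta * math.sin(phi), cos_theta)
    pdf = 1.0 / (2.0 * math.pi * (1.0 - cos_half_angle))
    return direction, pdf


def in_lobe(direction: Vec3, lobe_axis: Vec3, cos_half_angle: float) -> bool:
    """True if a stored (unit) direction still falls inside the cone."""
    d = (direction[0] * lobe_axis[0] + direction[1] * lobe_axis[1]
         + direction[2] * lobe_axis[2])
    return d >= cos_half_angle
```

If the cone angle depends only on roughness, moving the camera rotates the cone but leaves the PDF value untouched, so a stored sample only needs the `in_lobe` check against the new lobe axis.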

We have a p(y) that doesn't change, but p̂(y) still does. Can we come up with another function that is not affected as much by the view vector? Let's look at p̂(y) again:

p̂(y) = ρ · Li · (N.L)

Only the BRDF part changes when the view vector changes; neither N.L nor Li do. Hmmm, the ReSTIR GI paper suggests using Li as p̂(y). Of course it is a sub-optimal function, but it is unaffected by the view vector and even by the current surface normal, so it will get the job done. Plugging that simple p̂(y) into our RIS weight means that our reservoirs do not change when the view vector changes.

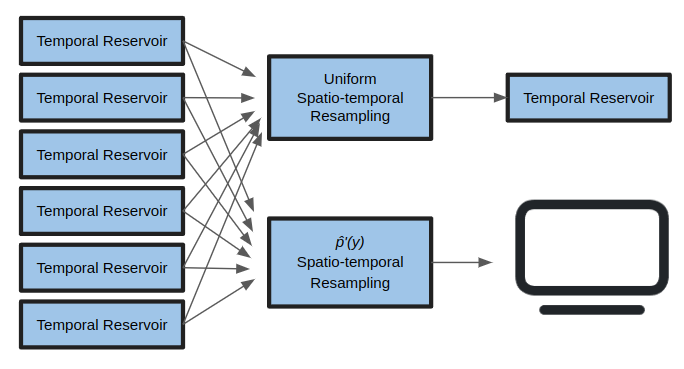

So spatio-temporal resampling is much easier since the view vector has less impact on our reservoirs. We will call these uniform reservoirs. Of course we must check whether the sample is in the specular lobe, and if not we reject it. I know I've written this like 3 times, but it's very, very important.
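A tiny sketch of the RIS weight for such a uniform reservoir, under a few assumptions of mine: the lobe is approximated by a cone with a constant PDF, Li is reduced to a scalar, and all names are hypothetical. The only view-dependent part left is the accept/reject test.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def uniform_ris_weight(li: float, uniform_pdf: float, sample_dir: Vec3,
                       lobe_axis: Vec3, cos_half_angle: float) -> float:
    """w(y) = p_hat(y) / p(y) with p_hat(y) = Li and a constant p(y).

    Samples that fall outside the current specular lobe are rejected by
    giving them a weight of zero.
    """
    cos_to_axis = (sample_dir[0] * lobe_axis[0] + sample_dir[1] * lobe_axis[1]
                   + sample_dir[2] * lobe_axis[2])
    if cos_to_axis < cos_half_angle or uniform_pdf <= 0.0:
        return 0.0
    return li / uniform_pdf
```

Neither `li` nor `uniform_pdf` depends on the view vector, so the weight of an accepted sample is the same before and after the camera moves.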

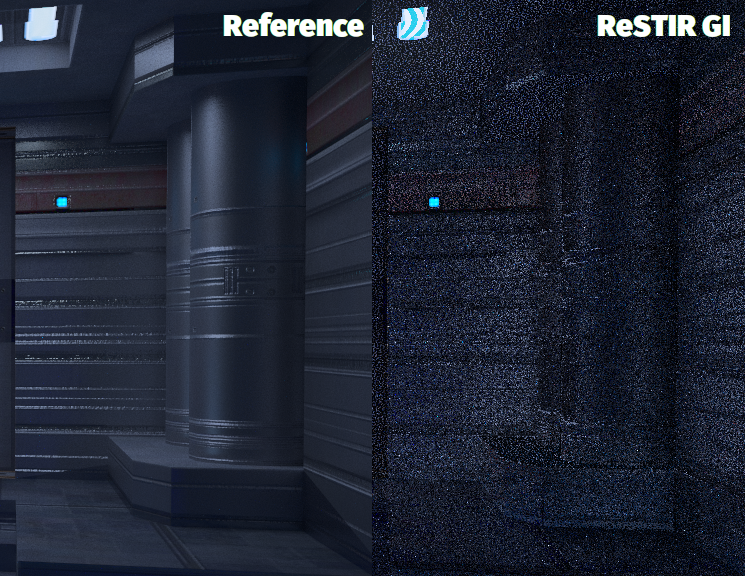

Does it all even work? Here's the result.

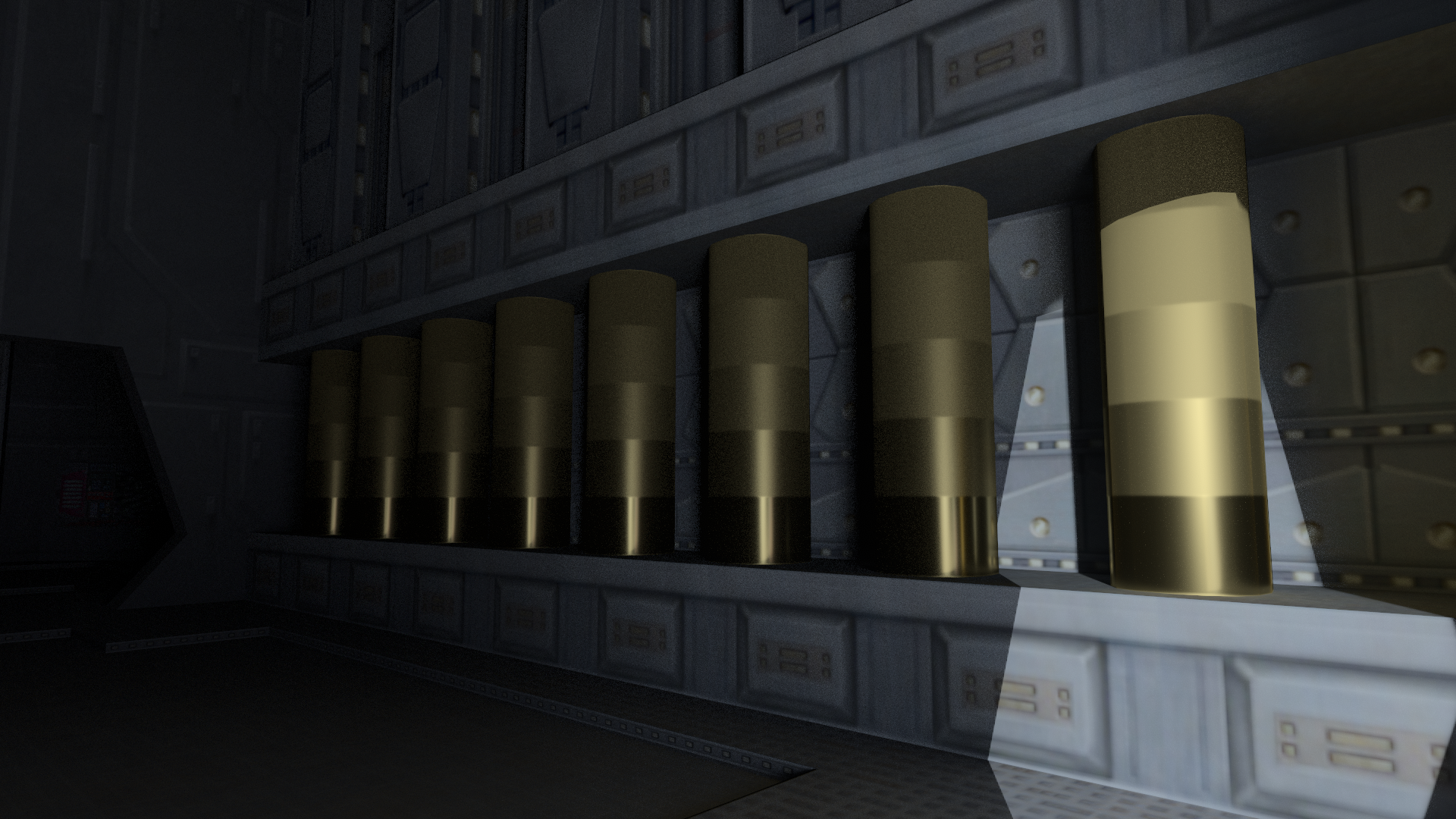

Not bad. Are we done? Not yet. Both functions are sub-optimal since they will over-sample the edge of the specular lobe and under-sample the center, bringing in some bias. The previous image doesn't really show it, but I've got a new one, and this is what the bias looks like:

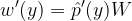

On the right of the image I have only indirect illumination showing. Notice how the reflections on the pipes are somewhat gone: they are under-sampled. How do we fix that? We can use RIS iteratively and sample a better p̂'(y) using our uniform samples. This new p̂'(y) function could use a real specular BRDF and N.L. The RIS weight would look like this:

w(y) = p̂'(y) · W

Where p̂'(y) is our new function with a specular BRDF and W is the weight from a uniform reservoir. With the new RIS weight in hand, we can stream it through a new reservoir, and the following would be our estimator:

Where p̂'(Xi) is our new function with a specular BRDF, Xi is the sample in our uniform reservoir and Wi is the weight from our uniform reservoir.
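For readers who want it spelled out, this is how these pieces usually combine in iterative RIS. It is a sketch under assumptions: M uniform reservoirs are streamed, y is the sample that survives in the new reservoir and f is the integrand being estimated (M, y, f and W' are notation introduced here for illustration).

$$
w_i = \hat{p}'(X_i)\,W_i, \qquad
W' = \frac{1}{\hat{p}'(y)}\,\frac{1}{M}\sum_{i=1}^{M} w_i, \qquad
\langle L \rangle = f(y)\,W'
$$

If p̂' is chosen to be the integrand itself (specular BRDF times Li times N.L), the f(y) and p̂'(y) factors cancel and the estimator reduces to the average of p̂'(Xi) · Wi over the M uniform reservoirs.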

The thing is that we can't just use this new RIS weight in all our reservoirs and store it, since that would bring back the effects of the dreaded view vector. How can we use it then? When performing the spatio-temporal resampling we could use two reservoirs: one where we just use our sub-optimal p̂(y), which we store for future use, and a disposable one where we sample our better p̂'(y), which we use when shading.
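The two-reservoir idea can be sketched like this. It is a simplified Python illustration, not my shader code: `PathSample`, `Reservoir`, `spatiotemporal_resample` and the `specular_target` callback are hypothetical names, Li is a scalar, every input reservoir counts as a single candidate with weight p̂ · W, and Jacobians and lobe checks are left out.

```python
import random
from dataclasses import dataclass
from typing import Callable, Iterable, Optional, Tuple


@dataclass
class PathSample:
    li: float  # incoming radiance carried by the path (scalar, assumption)


@dataclass
class Reservoir:
    sample: Optional[PathSample] = None
    w_sum: float = 0.0
    M: int = 0
    W: float = 0.0

    def update(self, candidate: PathSample, w: float, rng: random.Random) -> None:
        u = rng.random()  # always draw, so both reservoirs use the stream evenly
        self.w_sum += w
        self.M += 1
        if w > 0.0 and u * self.w_sum < w:
            self.sample = candidate

    def finalize(self, target: float) -> None:
        self.W = self.w_sum / (self.M * target) if self.M > 0 and target > 0.0 else 0.0


def spatiotemporal_resample(
    inputs: Iterable[Reservoir],
    specular_target: Callable[[PathSample], float],
    rng: random.Random,
) -> Tuple[Reservoir, Reservoir]:
    """Return (stored, shading): a view-independent reservoir to keep for the
    next frame and a disposable one driven by the view-dependent p_hat'."""
    stored, shading = Reservoir(), Reservoir()
    for r in inputs:
        if r.sample is None:
            continue
        stored.update(r.sample, r.sample.li * r.W, rng)                  # p_hat = Li
        shading.update(r.sample, specular_target(r.sample) * r.W, rng)   # p_hat'
    stored.finalize(stored.sample.li if stored.sample else 0.0)
    shading.finalize(specular_target(shading.sample) if shading.sample else 0.0)
    return stored, shading
```

The point of the split is that `stored` never touches `specular_target`, so whatever the camera does, what gets written back for the next frame stays view-independent, while `shading` is rebuilt from scratch every frame.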

Here are the results:

Reflections look much better.

My implementation uses separate reservoirs for the diffuse and specular lobes. They are selected at random with a 50% chance, unless the material is 100% metal, in which case it always goes specular. If the temporal reservoir is valid, it randomly decides whether to refresh that reservoir or to get a new sample, and the result is used to calculate light contribution and throughput. After that, spatio-temporal resampling happens and the result is stored for the next frame. I'm not using ReSTIR GI for materials with high specularity, that is, anything below 25% roughness; there I just use BRDF sampling. When a sample runs into a light, I shade the result and discard it. There is no point in saving the reservoir, since ReSTIR DI takes care of direct illumination quite well. You'll notice that this is a pretty watered-down implementation compared to the one described in the ReSTIR GI paper. Of course that brings in noise, but I'm trying to keep interactive frame rates and I'm a hack.
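The lobe selection rules above boil down to something like the following sketch. `choose_strategy` and its string labels are hypothetical, `u` is a uniform random number in [0, 1), and which half of the range maps to which lobe is an arbitrary choice made for the example.

```python
from typing import Tuple


def choose_strategy(metallic: float, roughness: float, u: float) -> Tuple[str, str]:
    """Pick a lobe and the sampling technique used for it.

    - 100% metal always goes specular, otherwise it is a 50/50 pick.
    - Specular below 25% roughness falls back to plain BRDF sampling.
    """
    lobe = "specular" if metallic >= 1.0 or u < 0.5 else "diffuse"
    if lobe == "specular" and roughness < 0.25:
        return lobe, "brdf_sampling"
    return lobe, "restir_gi"
```

For example, `choose_strategy(1.0, 0.6, 0.9)` picks the specular lobe with ReSTIR GI, while `choose_strategy(0.0, 0.1, 0.2)` picks the specular lobe with plain BRDF sampling.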

For future work, I've been thinking about testing this algorithm on a more serious renderer, maybe Falcor. There might also be value in resampling specular reservoirs when dealing with the diffuse lobe, which could be particularly helpful on disocclusion.

ReSTIR GI works in screen space. How could we connect to more interesting paths that are off-screen? I have ideas, stay tuned.

Footnotes

[1] ReSTIR GI: Path Resampling for Real-Time Path Tracing

[2] Spatiotemporal reservoir resampling for real-time ray tracing with dynamic direct lighting

[3] How to add thousands of lights to your renderer and not die in the process

[4] Part 1: Rendering Games With Millions of Ray Traced Lights